Vocabulary and Language Model Adaptation for Automatic Speech Recognition

The accuracy of an ASR system is measured by the percentage of correctly recognized words from a reference (manual) transcript. Traditional (hybrid) ASR systems aim to achieve the best accuracy via the cooperation of three components: an acoustic model, a pronunciation model, and a language model (LM). The basics and functions of these models were covered in a previous blog post. In this episode, we take a look at the LM in more detail.

In a typical ASR system with a fixed vocabulary and a word n-gram LM, words that the system has never seen have no chance of appearing in the automatic transcription. For example, words like “Brexit” or “COVID”, which did not exist some years ago, cannot be recognized by older systems, even though they are very frequent in speech today. This is a major challenge for applications like broadcast-news transcription, where new words are introduced to the public almost daily (names of new politicians, foreign locations, etc.). The same holds for criminal investigations, in which suspects may obscure the intended meaning of words by using them out of context, or by inventing code names and abbreviations. End-users such as law enforcement authorities (LEAs), who aim to recognize such words correctly, need to ensure that the words and jargon they are interested in are present in the vocabulary and that the LM is well trained with those words in context.

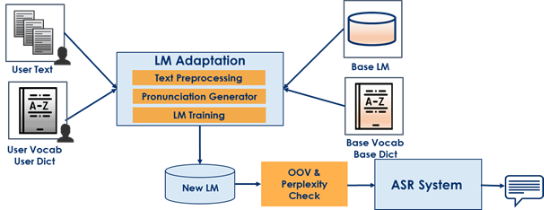

The LM adaptation component, which will soon be part of the ROXANNE solution, aims to tackle this problem by giving end-users the opportunity to adapt existing language models with new data, or to build new language models from scratch in a semi-automatic way. The vocabulary and LM adaptation process is depicted in Figure 1.

Figure 1: Language model adaptation in ROXANNE.

The ASR component in ROXANNE comes with a language-specific “base” LM, together with a matching vocabulary/dictionary. The base LM is typically a 3-gram model which is trained on millions of words of running text and contains the occurrence probabilities of single words (unigrams) and of their two-word (bigram) and three-word (trigram) sequences in the language of interest. The vocabulary/dictionary, sometimes also called the lexicon, complements the LM with the list of unique spellings occurring in the training data and their pronunciations. Each pronunciation is a sequence of phonemes picked from the phonetic inventory of that language. Phonemes are the distinct sounds occurring in a particular language and are commonly described in terms of the International Phonetic Alphabet (IPA). Different languages typically use different phoneme inventories.
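To make the idea of n-gram probabilities concrete, here is a minimal sketch of how a trigram probability could be estimated from counts. It assumes a plain maximum-likelihood estimate without smoothing; production LMs use smoothing and back-off, and the function names and toy corpus below are illustrative only, not part of ROXANNE.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Count all n-grams of order n in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def trigram_probability(tokens, w1, w2, w3):
    """Maximum-likelihood estimate of P(w3 | w1, w2), with no smoothing."""
    bigrams = ngram_counts(tokens, 2)
    trigrams = ngram_counts(tokens, 3)
    context = bigrams[(w1, w2)]
    if context == 0:
        return 0.0  # the context itself was never observed
    return trigrams[(w1, w2, w3)] / context


# Toy training text (hypothetical).
corpus = "the suspect left the house and the suspect left the car".split()

p_house = trigram_probability(corpus, "left", "the", "house")
p_car = trigram_probability(corpus, "left", "the", "car")
p_unseen = trigram_probability(corpus, "left", "the", "brexit")
```

In this toy corpus, “left the” occurs twice and is followed once by “house” and once by “car”, so both get the same probability, while a word that never occurs in the training text gets probability zero. This is the unseen-word problem described above.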

Let’s now have a look at the stages of vocabulary and LM adaptation as presented in Figure 1:

The process is initiated by the user inputting some text (e.g., documents, messages) into the system. The user can optionally also add a list of words or phrases (the “vocabulary”, which could be a manually derived list as well as the output of NLP components) and their pronunciations in the language of interest (the “dictionary”). From an LEA’s operational point of view, the input text can be documents, reports, messages, manual transcripts of previously intercepted calls, etc., and the vocabulary can be the list of words of interest, such as codewords, nicknames, names of suspects, names of locations and organisations, etc.

The next stage, LM adaptation, can be broadly divided into three steps. In the first step, the following preprocessing operations are applied to the input text:

Normalization: punctuation marks are removed and words are transformed into their natural case. Non-body text (e.g., HTML tags, headers and footers) and words written in a different script are discarded.

Number handling: numerals in the text are converted into their spelled-out forms. For example, “-2°C temperature and 82% humidity were measured on December 16, 2021” becomes “minus two degrees Celsius temperature and eighty two percent humidity were measured on December sixteenth two thousand twenty one”.

Spelling handling: compound words are de-compounded, detected abbreviations are marked.
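The preprocessing operations above can be sketched roughly as follows. This is a simplified illustration, not the ROXANNE implementation: it assumes English, lowercases everything instead of restoring natural case, spells out only integers from 0 to 99 and the percent sign, and leaves longer numbers untouched. All function names are hypothetical.

```python
import re

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven",
        "eight", "nine", "ten", "eleven", "twelve", "thirteen", "fourteen",
        "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty",
        "sixty", "seventy", "eighty", "ninety"]


def number_to_words(n):
    """Spell out an integer in the range 0..99."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] if ones == 0 else f"{TENS[tens]} {ONES[ones]}"


def preprocess(text):
    """Strip markup, spell out small numbers, remove punctuation, lowercase."""
    text = re.sub(r"<[^>]+>", " ", text)      # discard HTML tags
    text = text.replace("%", " percent ")     # spell out the percent sign
    # Spell out one- or two-digit numbers that are not part of a longer number.
    text = re.sub(r"(?<!\d)\d{1,2}(?!\d)",
                  lambda m: number_to_words(int(m.group())), text)
    text = re.sub(r"[^\w\s]", " ", text)      # remove punctuation marks
    return " ".join(text.lower().split())     # lowercase, collapse whitespace


cleaned = preprocess("<p>Humidity was 82%, measured at 7 sites.</p>")
```

A real number-handling module also has to deal with dates, ordinals, units, and negative or decimal values, which are language-specific and go well beyond this sketch.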

In the second step, pronunciation generation, the words of the preprocessed text are compared against those in the “base” LM, vocabulary and dictionary. New words which do not appear in the dictionary (also called out-of-vocabulary, or OOV, words) are assigned a pronunciation according to the language-specific phonetic transcription rules. The system is aware of the fact that words with different spellings may share the same pronunciation (e.g., “If you write to Mr. Wright, he will give you the right answer.”) and that words with the same spelling might be pronounced differently (e.g., “I am coming at 1 AM.”). In addition, it can automatically detect and distinguish between the two types of abbreviations: initialisms, which are spelled out letter by letter (e.g., FBI), and acronyms, which are pronounced as a word (e.g., NATO). The user also has the option to provide alternative pronunciations to the system, especially for foreign words, accented speech, and local variations (e.g., either).
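As an illustration of this step, the sketch below finds OOV words by comparing tokens against a toy dictionary and generates a letter-by-letter pronunciation for an initialism. The lexicon, the ARPAbet-style phoneme symbols, and the helper names are assumptions made for this example; how the actual system detects abbreviations and derives pronunciations is not shown here.

```python
# Toy lexicon: each spelling maps to one or more pronunciations.
# Phoneme symbols are ARPAbet-style and purely illustrative.
lexicon = {
    "write": [["R", "AY", "T"]],
    "right": [["R", "AY", "T"]],                         # same sound, other spelling
    "either": [["IY", "DH", "ER"], ["AY", "DH", "ER"]],  # two variants
}

# Pronunciations of letter names, used for spelling out initialisms.
LETTER_NAMES = {"f": ["EH", "F"], "b": ["B", "IY"], "i": ["AY"]}


def find_oov(tokens, lexicon):
    """Return tokens missing from the lexicon, in first-seen order, no duplicates."""
    seen = set()
    oov = []
    for token in tokens:
        if token not in lexicon and token not in seen:
            seen.add(token)
            oov.append(token)
    return oov


def spell_out(initialism):
    """Build a pronunciation for an initialism by concatenating letter names."""
    phones = []
    for letter in initialism.lower():
        phones.extend(LETTER_NAMES[letter])
    return phones


oov_words = find_oov("write to wright either brexit wright".split(), lexicon)
fbi_pron = spell_out("FBI")
```

Storing a list of pronunciations per spelling is what allows a user to add alternatives for foreign words or local variants without replacing the existing entry.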

Finally, in the LM training step, a new LM is computed from the input data, possibly interpolated with the base model. The system then deploys this LM into the ASR engine in order to transcribe further audio files. Optionally, a quick integrity check can be run on the new model to make sure that it will provide better accuracy than the previous one.
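Interpolation with the base model can be pictured as a weighted mix of two probability distributions. The sketch below assumes simple linear interpolation of toy unigram models and uses perplexity on held-out text as a proxy for the integrity check; the weight, the floor probability for unseen words, and the function names are hypothetical choices, and the check used in practice may differ.

```python
import math


def interpolate(base, adapted, weight):
    """Linear interpolation: weight * adapted + (1 - weight) * base."""
    vocab = set(base) | set(adapted)
    return {w: weight * adapted.get(w, 0.0) + (1 - weight) * base.get(w, 0.0)
            for w in vocab}


def perplexity(model, tokens, floor=1e-6):
    """Perplexity of a unigram model; unseen words get a small floor probability."""
    log_sum = sum(math.log(model.get(t, floor)) for t in tokens)
    return math.exp(-log_sum / len(tokens))


# Toy unigram models (hypothetical probabilities).
base = {"the": 0.5, "vote": 0.3, "election": 0.2}
adapted = {"the": 0.4, "vote": 0.2, "brexit": 0.4}

mixed = interpolate(base, adapted, weight=0.5)

# Held-out text containing a word that only the adaptation data covers.
held_out = "the brexit vote".split()
improved = perplexity(mixed, held_out) < perplexity(base, held_out)
```

Lower perplexity on text from the target domain suggests that the adapted model fits that domain better, although only a comparison of recognition accuracy on transcribed audio would confirm the gain.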

The LM adaptation component in the ROXANNE solution is based on the Language Model Toolkit, a tool developed by HENSOLDT Analytics that is operational for 10 languages as part of their automatic transcription software, the Media Mining Indexer [1].

[1] E. Dikici, G. Backfried, J. Riedler, “The SAIL LABS Media Mining Indexer and the CAVA Framework”, INTERSPEECH 2019, pp. 4630-4631 (2019).