Entity Recognition and how it can help LEAs

The task of looking at a piece of text and identifying the important “entities” in it is formally known as Named Entity Recognition in natural language processing. What qualifies as a named entity depends largely on the users and the data. The most common named entities in textual content are persons, places and organizations. Other entities include monetary values, dates and other temporal expressions. Normally, the task is carried out by a Natural Language Unit (NLU) in a word-tagging fashion. That is, the NLU looks at every word in a target piece of text and labels it either as an entity or as a non-entity. Once it has gone through the entire text, we can extract all words that were tagged as named entities.
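The word-tagging idea can be illustrated with a small sketch. The tagged sentence, the label names and the `extract_entities` helper below are all made up for illustration; a real NER module would produce the labels itself.

```python
# Hypothetical tagger output: one (word, label) pair per word.
# "O" marks a word that is not part of any entity; the label names are illustrative.
tagged = [
    ("Anna", "PERSON"), ("Schmidt", "PERSON"), ("flew", "O"), ("to", "O"),
    ("Berlin", "PLACE"), ("on", "O"), ("Monday", "DATE"), (".", "O"),
]


def extract_entities(tagged_words):
    """Group consecutive words that share an entity label into one entity."""
    entities = []
    current_words, current_label = [], None
    for word, label in tagged_words:
        if label != "O" and label == current_label:
            # Same entity continues (e.g. first name followed by surname).
            current_words.append(word)
            continue
        if current_words:
            # The previous entity has ended, so store it.
            entities.append((" ".join(current_words), current_label))
        if label != "O":
            current_words, current_label = [word], label
        else:
            current_words, current_label = [], None
    if current_words:
        entities.append((" ".join(current_words), current_label))
    return entities


print(extract_entities(tagged))
```

Grouping by identical neighbouring labels is a simplification: two different entities of the same type that sit next to each other would be merged, which is why practical taggers usually mark the beginning of each entity explicitly.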

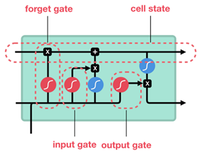

The NLU used for this task is called a Named Entity Recognition (NER) module. The construction of NER modules is one of the fundamental tasks in Natural Language Processing. Major advancements in neural networks have improved the accuracy of NER modules considerably. The development of Recurrent Neural Networks (RNNs) has been particularly significant in this context. Recurrent neural networks are neural networks that process information sequentially, preserving the temporal aspect of the data. Very much like humans, they process words one by one, in the order in which they appear in the text. This essentially means that when the model is processing a token, it is aware of the words that came before it but oblivious to the words that lie ahead. Processing the data sequentially gives the module a better understanding of language, which in turn improves label predictions.

A notable candidate in this regard is the Long Short-Term Memory (LSTM) [1] unit, which is a modified version of a typical RNN with a cell state and gate operations to control the information in it. Basically, the cell state is a vector representation of the context that the model needs to know when making a prediction at one token position. It is continually updated after each processed token by adding or removing information as required. This approach works well and gives much better results than vanilla RNNs, which lack such a gated cell state and can struggle with long-range dependencies.

![Figure 2: Attention Mechanism in Transformer [4] Picture1.png](https://roxanne-euproject.org/news/picture1.png/@@images/3d9fed53-9c3f-453c-b067-9c1344c526a8.png)

Figure 1: Illustration of an LSTM Model [2]
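The cell-state update described above can be sketched in a few lines. This is a deliberately reduced version that assumes a single scalar input, hidden state and cell state and uses the standard LSTM gate equations. The parameter values and the `lstm_step` name are illustrative; a real module works with learned weight matrices and vectors.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step on scalars. params maps a gate name to (w_x, w_h, bias)."""
    def pre_activation(gate):
        w_x, w_h, bias = params[gate]
        return w_x * x + w_h * h_prev + bias

    forget = sigmoid(pre_activation("forget"))         # how much old context to keep
    write = sigmoid(pre_activation("input"))           # how much new information to add
    output = sigmoid(pre_activation("output"))         # how much of the cell to expose
    candidate = math.tanh(pre_activation("candidate"))  # the new information itself

    c = forget * c_prev + write * candidate  # remove, then add information
    h = output * math.tanh(c)                # hidden state used for the prediction
    return h, c


# Illustrative (untrained) parameters and a toy input sequence.
params = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.8, -0.2, 0.0),
    "output": (0.3, 0.4, 0.0),
    "candidate": (1.2, 0.5, 0.0),
}
h, c = 0.0, 0.0
for x in [0.2, -0.7, 1.0]:  # tokens are processed one by one, left to right
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:+.1f}  h={h:+.4f}  c={c:+.4f}")
```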

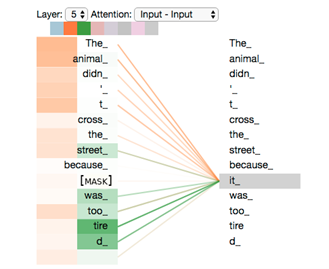

The advent of attention-based Transformer [3] models has resonated in most research domains within AI. Transformers work on global attention rather than recurrence. In the Natural Language Processing (NLP) context, this means that the model is allowed to see the words that precede and follow the word it is currently processing. Moreover, the model can choose how much attention it pays to each word around the target word. This method really puts things in “context”: the model can closely observe the context in which a word appears and direct its attention to the portions of that context that matter when making a prediction for that particular word. By contrast, the RNN model is causal: it can only observe the words that come before and cannot “look ahead”. Intuitively, it makes sense to observe both sides of the context when deciding whether a token is a named entity or not.

Transformer models are first “pre-trained” on the target language before they are used for NER. This means that the models are initially given simpler tasks to improve their understanding of the language. A common pre-training method is Masked Language Modelling, where one word in a sentence is masked and the transformer is asked to predict the masked word. This is very similar to the “fill in the blanks” questions that children are given in school. The transformer observes the context of the masked word (on both sides, thanks to the attention mechanism) and tries to predict which word could replace the mask. This kind of pre-training is done with billions of pieces of text, at which point the model becomes very good at predicting the masked token and consequently ends up understanding the semantics and structure of the language.
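To make the “fill in the blanks” idea concrete, here is a minimal sketch of how one masked training example could be built from a sentence. The `[MASK]` placeholder, the example sentence and the `make_masked_example` helper are assumptions for illustration; real pre-training pipelines work on sub-word tokens and mask several positions at once.

```python
import random


def make_masked_example(sentence, rng, mask_token="[MASK]"):
    """Hide one randomly chosen word; return the masked sentence and the hidden word."""
    words = sentence.split()
    position = rng.randrange(len(words))
    target = words[position]
    masked = words[:position] + [mask_token] + words[position + 1:]
    return " ".join(masked), target


rng = random.Random(0)  # fixed seed so the example is repeatable
sentence = "the suspect called his brother from Berlin"
masked_sentence, target = make_masked_example(sentence, rng)
print(masked_sentence)          # the input shown to the model
print("to predict:", target)    # the word the model should recover
```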

Figure 2: Attention Mechanism in Transformer [4]
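As a rough illustration of how a model “chooses how much attention” to give each word, the sketch below computes scaled dot-product attention weights for one target word over all words in a sentence. The toy two-dimensional vectors and the `attend` helper are assumptions; the learned projections and multiple attention heads of a real Transformer are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The context vector is the attention-weighted average of the value vectors.
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context


# Toy vectors for a three-word sentence; words before AND after the target are visible.
words = ["Anna", "called", "Berlin"]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = keys
query = [0.0, 3.0]  # vector for the word currently being processed

weights, context = attend(query, keys, values)
for word, weight in zip(words, weights):
    print(f"{word:>7}: {weight:.3f}")
```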

Once the model understands the basic semantics of the language through pre-training, it is taught more specific tasks like NER. We call this “fine-tuning”. Intuitively, this process is very similar to how language education is imparted in real life: teach the language first, train on specific tasks later. This approach outperforms RNNs in almost all NLP tasks, including NER.

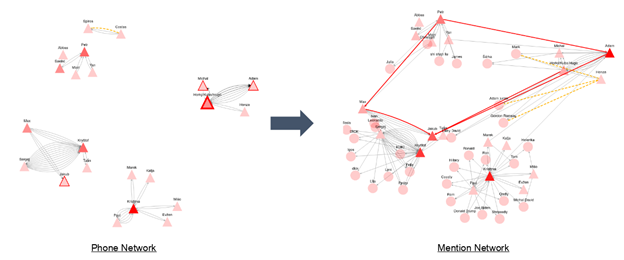

Coming back to the criminal domain, the detection of named entities can help law enforcement agencies (LEAs) pick out the words and tokens they are interested in. In ROXANNE, one use case of NER is to establish contacts and connections between people. The researchers at Saarland University have developed what we call a “Mention Network” for a scenario of criminal conversations over the phone. Their work is based on the idea that people mentioned in a phone call are potential contacts of the speakers. A conversation that happens over the phone is processed by an NER module, which looks specifically for other people mentioned in the call. The NER module itself is a pre-trained Transformer fine-tuned on named entity detection. This information is used to supplement a phone network with additional nodes and edges. The enriched network is then available for the LEA to scrutinize.

Figure 3: Illustration of the mention network
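The idea of supplementing a phone network with mentioned people can be sketched as follows. This is not the Saarland University implementation: the call records, the names, the edge types and the `build_mention_network` helper are all hypothetical, and the person names are assumed to have already been found by an NER module.

```python
from collections import defaultdict

# Hypothetical records: (caller, callee, person names detected in the call).
calls = [
    ("Alice", "Bob", ["Carol"]),
    ("Bob", "Dave", ["Carol", "Eve"]),
]


def build_mention_network(call_records):
    """Return {(person_a, person_b): set of edge types} for an undirected network."""
    edges = defaultdict(set)
    for caller, callee, mentioned in call_records:
        # Edge from the original phone network.
        edges[tuple(sorted((caller, callee)))].add("call")
        for name in mentioned:
            # A mentioned person is treated as a potential contact of both speakers.
            for speaker in (caller, callee):
                if name != speaker:
                    edges[tuple(sorted((speaker, name)))].add("mention")
    return dict(edges)


network = build_mention_network(calls)
nodes = sorted({person for pair in network for person in pair})
print("nodes:", nodes)
for pair, kinds in sorted(network.items()):
    print(pair, sorted(kinds))
```

Keeping the edge type on each connection lets an analyst distinguish observed calls from contacts that were only inferred from mentions.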

References:

[1] Hochreiter, Sepp, and Jürgen Schmidhuber. "Long short-term memory." Neural Computation 9.8 (1997): 1735-1780.

[3] Vaswani, Ashish, et al. "Attention is all you need." Advances in Neural Information Processing Systems 30 (2017).

[4] Original Figure from https://jalammar.github.io/illustrated-transformer/; Edited for illustration.